The government has amended the IT Rules, 2021, to strengthen online safety and accountability. It introduced Grievance Appellate Committees, required intermediaries to protect users’ rights, and proposed mandatory removal of content flagged “false” by a Fact Check Unit, reshaping digital rights and platform responsibility.


Picture Courtesy: ddnews

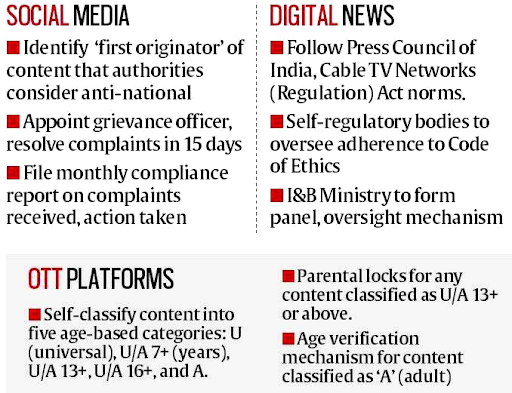

The Ministry of Electronics and Information Technology (MeitY) amended the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021.

Initially notified in February 2021, the IT Rules laid out core obligations for online intermediaries (including social media platforms) under the Information Technology Act, 2000.

The Latest Amendments (October 2025)

To prevent arbitrary content removal and ensure high-level oversight, the latest amendments to Rule 3(1)(d) introduce a three-tiered safeguard:

Regulating AI-Generated Content and Deepfakes (October 2025 Draft Amendments)

Definition of Synthetically Generated Information

The draft rules define "synthetically generated information" as content (image, video, or audio) that is created or altered algorithmically so that it appears authentic.

Mandatory Labelling

Platforms must visibly label AI-generated content. For visual content, the label must cover at least 10% of the display area; for audio, an audible identifier must play during the first 10% of the clip's duration.
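The draft states both thresholds only as percentages. A minimal sketch of the arithmetic in Python, assuming "10% of the screen area" means 10% of the displayed frame's pixel area and treating the label as a simple rectangle; the function names and the `LABEL_PERCENT` constant are hypothetical and are not taken from the rules:

```python
# Hypothetical helpers (names are illustrative, not from the draft rules) that
# turn the draft's "10%" thresholds into concrete numbers.
LABEL_PERCENT = 10  # assumed: share of display area / audio duration


def min_label_area_px(frame_w_px: int, frame_h_px: int) -> int:
    """Smallest label area, in pixels, covering at least 10% of the frame."""
    total = frame_w_px * frame_h_px
    # integer ceiling division avoids floating-point rounding surprises
    return -(-total * LABEL_PERCENT // 100)


def label_is_compliant(label_w_px: int, label_h_px: int,
                       frame_w_px: int, frame_h_px: int) -> bool:
    """True if a rectangular label covers at least 10% of the frame area."""
    return label_w_px * label_h_px * 100 >= frame_w_px * frame_h_px * LABEL_PERCENT


def audio_identifier_window_s(clip_duration_s: float) -> float:
    """Length (seconds) of the opening segment in which the identifier must play."""
    return clip_duration_s * LABEL_PERCENT / 100


if __name__ == "__main__":
    print(min_label_area_px(1920, 1080))              # 207360
    print(label_is_compliant(1920, 108, 1920, 1080))  # True
    print(audio_identifier_window_s(30.0))            # 3.0
```

On a 1920 × 1080 frame, a full-width banner would therefore need to be at least 108 px tall, and a 30-second audio clip would need its identifier within the first 3 seconds. Actual compliance would also have to handle cropping, scaling, and differing screen sizes, which this sketch ignores.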

User Declaration

Significant Social Media Intermediaries (SSMIs) must require users to declare whether uploaded content is synthetically generated.

Platform Accountability

SSMIs must deploy technical measures to verify these declarations and to detect and label deepfakes, improving user awareness and traceability. Non-compliance risks the loss of 'safe harbour' protection under Section 79 of the IT Act, 2000.

Vagueness and Overbreadth

Vague terms such as "unlawful information" and "fake, false, or misleading" could lead to arbitrary enforcement and legal challenges, echoing Shreya Singhal v. Union of India (2015), in which the Supreme Court struck down Section 66A of the IT Act for vagueness and overbreadth.

Potential for Censorship

Critics are concerned that government-mandated content removal and fact-checking, even with senior-level authorisation, could suppress dissent and weaken democratic discourse.

Dilution of Safe Harbour Protection

Increased liability and "take down or lose immunity" clauses could push intermediaries toward over-censorship to avoid legal risk.

Feasibility of AI Regulation

Mandatory labelling and detection of AI-generated content face technical hurdles. Keeping a label visible over 10% of the display across different devices and screen sizes, and detecting synthetic content accurately at scale, are both difficult, and the resulting compliance burden could hinder innovation.

Lack of Independent Oversight

Concerns have been raised about the independence and impartiality of the government-appointed Grievance Appellate Committees and the Fact Check Unit.

Way Forward

Holistic Regulation: Address newer technologies like 5G, IoT, cloud computing, metaverse, blockchain, and cryptocurrency.

Reclassification of Intermediaries: Categorize online intermediaries into distinct groups (e.g., social media, online gaming, cloud services) with tailored regulations, moving beyond a "one-size-fits-all" approach.

Strengthened Cybercrime Laws: Criminalize emerging cybercrimes like cyberbullying, identity theft, and doxxing.

Balancing Rights and Responsibilities: Establish a robust framework that respects constitutional rights while ensuring online safety, trust, and accountability.

The 2021 IT Rules have been amended to enhance digital governance, tackle misinformation, deepfakes, and online gaming, and promote transparency and user safety while upholding constitutional rights.

Source: ddnews

PRACTICE QUESTION

Q. Balancing the fight against misinformation with the preservation of free speech is the central challenge of modern digital governance. Critically analyze. (250 words)

The Ministry of Electronics and Information Technology (MeitY) introduced the IT Rules, 2021, under the Information Technology Act, 2000. These guidelines aim to regulate digital media platforms, including social media companies, streaming services, and digital news publishers, to enhance accountability and ensure online safety.

Deepfakes are a type of synthetic media generated through artificial intelligence (AI) and machine learning. They create highly realistic videos, images, or audio that depict individuals doing or saying things they never did.

Deepfakes are commonly created with deep learning techniques such as Generative Adversarial Networks (GANs). A GAN pairs two neural networks: a "generator" that produces fake media and a "discriminator" that tries to distinguish it from real samples. The two are trained against each other in a feedback loop, with the generator improving until the discriminator can no longer reliably tell its output from authentic media.
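The adversarial feedback loop can be made concrete with a deliberately tiny example. The sketch below is a toy, not how production deepfake systems are built: it assumes one-dimensional "media" (real samples drawn from a normal distribution with mean 2), a generator that learns only a shift `b`, and a logistic-regression discriminator. `train_toy_gan`, `REAL_MEAN`, and all hyperparameters are hypothetical. Each round first updates the discriminator to separate real from fake, then updates the generator to fool it.

```python
import math
import random

# Toy 1-D GAN, a sketch under illustrative assumptions (not a real deepfake model):
#   real data      x ~ N(2, 1)
#   generator      G(z) = z + b, with z ~ N(0, 1)  (one learnable shift b)
#   discriminator  D(x) = sigmoid(w * x + c), its estimate that x is real
REAL_MEAN = 2.0


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=4000, batch=32, lr=0.05, weight_decay=0.1, seed=0):
    rng = random.Random(seed)
    b = -2.0         # generator starts far from the real mean
    w, c = 0.0, 0.0  # discriminator starts with no opinion (D(x) = 0.5)
    for _ in range(steps):
        # 1) Discriminator step: minimise -log D(real) - log(1 - D(fake)),
        #    plus a small L2 penalty on (w, c) to damp oscillations.
        gw = gc = 0.0
        for _ in range(batch):
            x_real = rng.gauss(REAL_MEAN, 1.0)
            g = sigmoid(w * x_real + c) - 1.0  # d/ds of -log sigmoid(s)
            gw += g * x_real
            gc += g
            x_fake = rng.gauss(0.0, 1.0) + b
            g = sigmoid(w * x_fake + c)        # d/ds of -log(1 - sigmoid(s))
            gw += g * x_fake
            gc += g
        w -= lr * (gw / batch + weight_decay * w)
        c -= lr * (gc / batch + weight_decay * c)

        # 2) Generator step (non-saturating loss): minimise -log D(G(z)),
        #    i.e. move b so that fakes are scored as "real".
        gb = 0.0
        for _ in range(batch):
            x_fake = rng.gauss(0.0, 1.0) + b
            gb += (sigmoid(w * x_fake + c) - 1.0) * w  # chain rule: ds/db = w
        b -= lr * gb / batch
    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"generator shift b = {b:.3f} (real mean {REAL_MEAN})")
    print(f"D at the real mean = {sigmoid(w * REAL_MEAN + c):.3f}")
```

If the sketch behaves as intended, `b` should drift toward `REAL_MEAN` and the discriminator's output should approach 0.5, the point at which it can no longer tell the two distributions apart. The weight-decay term is an added assumption that damps the oscillations simple adversarial training can show; real deepfake generators replace these scalar models with deep neural networks operating on images or audio.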

© 2026 iasgyan. All rights reserved