The rise of AI-driven deepfakes, flagged by the World Economic Forum as a top global risk, threatens India’s democracy, security, and social trust. India is responding through the Information Technology Rules, content-labelling advisories, and the proposed Digital India Act. A balanced approach requires risk-based laws, technical safeguards, collaboration, and digital literacy.

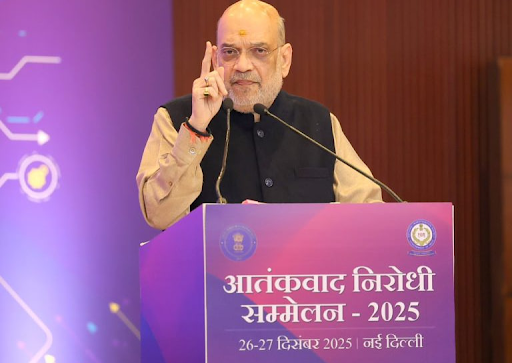

Picture Courtesy: LIVEMINT

The proliferation of AI-generated content, particularly deepfakes, has become a significant global concern, threatening societal trust and national security.

Artificial Intelligence (AI)-generated content, also known as synthetic media, refers to text, images, audio, and video created by AI systems such as generative models.

Its increasing sophistication and accessibility have far-reaching implications for national security, public trust, and social cohesion.

This content is fundamentally different from traditional media because it democratizes the ability to create highly realistic forgeries, a skill once limited to experts.

Threats to National Security

AI-generated content poses a serious threat to national security by accelerating the spread of disinformation.

Manipulation and Destabilization: Deepfake technology (video and audio) allows the creation of synthetic media of public figures to manipulate opinion, incite violence, and destabilize governance (e.g., a deepfake of a political leader announcing surrender).

Blurring Reality: The ease of creating synthetic media makes it difficult for both citizens and authorities to distinguish truth from fiction and to respond to genuine threats.

Weaponized Disinformation: Hostile actors can create false narratives and impersonate officials to undermine democratic processes.

Erosion of Trust (The "Liar's Dividend"): The flood of synthetic media can cause real, verifiable evidence to be dismissed as fake, severely eroding public trust in institutions.

Critical Infrastructure Attacks: Malicious actors can use AI to facilitate disruptions of essential operations (e.g., power grids, transportation systems).

Cybercrime and Financial Fraud

The rise of Generative AI has provided cybercriminals with a powerful new tool, increasing the scale and sophistication of fraudulent activities.

Decline in Trust in Media and Institutions

The inability to distinguish real content from AI-generated fakes leads to widespread skepticism and a decline in public trust.

When people are constantly exposed to hyper-realistic deepfakes, they may begin to doubt the authenticity of all digital content, including verified news from credible sources.

This erosion of a shared factual basis is detrimental to informed public discourse and the functioning of democracy.

Societal Harms and Personal Damage

Beyond politics, AI-generated content inflicts serious personal and social harm. A major concern is the creation of non-consensual explicit content, predominantly targeting women.

Studies show that 98% of deepfake videos are pornographic and that the vast majority of these use the likeness of women without their consent (Source: Tech Advisors, 2025). Other harms include:

Governments worldwide are struggling to regulate AI-generated content. Their approaches vary with legal and cultural differences, but all try to curb harm without stifling innovation or free speech.

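| Jurisdiction | Key Regulations and Approach |
|---|---|
| India | Information Technology Rules, content-labelling advisories, and the proposed Digital India Act |
| European Union | |
| United States | |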

Technical Measures

Technology offers the first line of defense, though it is not a complete solution. The field is a constant cat-and-mouse game between content generation and detection.

Policy and Institutional Responses

Effective governance requires clear rules, platform accountability, and collaboration.

Social and Educational Solutions

Building societal resilience is crucial for long-term mitigation.

Mitigating the risks of AI-generated content, from national security threats and fraud to eroding public trust, requires a multi-layered strategy that combines technical detection, enforceable legal frameworks, platform accountability, and stronger public digital literacy.

Source: LIVEMINT

PRACTICE QUESTION

Q. Discuss the social and security implications of AI-generated content in the Indian context. (150 words)

AI-generated content, or synthetic media, is any text, image, audio, or video created by AI. Deepfakes are a specific type, referring to hyper-realistic videos or audio where a person's likeness is digitally altered or replaced, making it extremely difficult to distinguish from authentic content.

Deepfakes can be weaponized by hostile state and non-state actors to spread disinformation, manipulate public opinion during elections, incite social unrest, and conduct psychological warfare, thereby directly threatening a nation's security and stability.

The Digital India Act is a proposed legislative framework intended to replace the outdated IT Act, 2000. It is expected to include specific provisions for regulating high-risk AI systems, penalizing platforms that fail to curb harmful content, and reforming the "safe harbour" protection for intermediaries.

© 2026 iasgyan. All rights reserved.