
Picture Courtesy: INDIAN EXPRESS

The Ministry of Electronics and Information Technology (MeitY) has proposed draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, that would mandate clear labelling of AI-generated content on social media platforms.

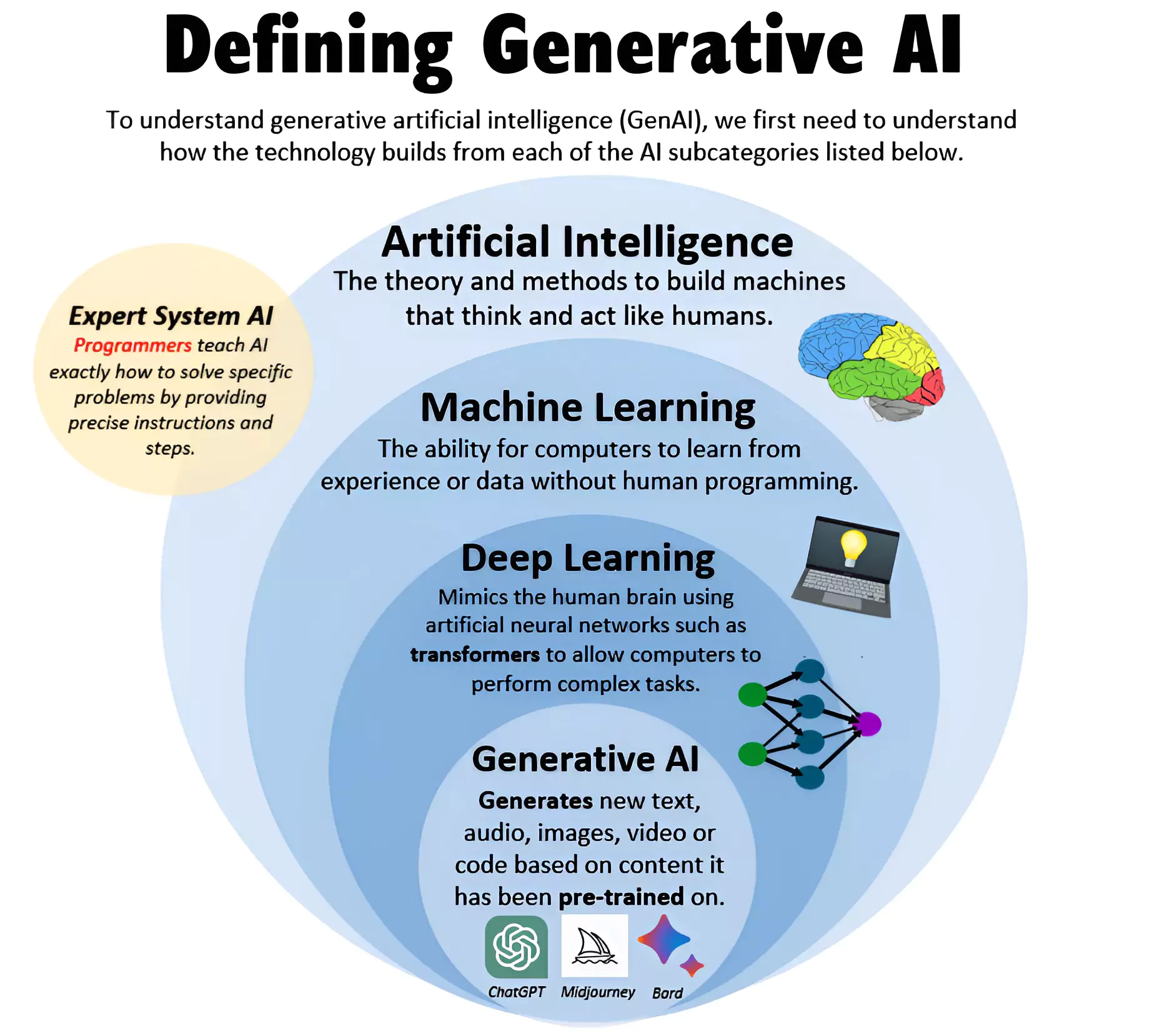

AI-generated content refers to any media (text, audio, images, videos) created by artificial intelligence tools, which utilize machine learning to analyze datasets, identify patterns, and produce original content.

The rapid spread of generative AI tools has led to a surge in "synthetically generated information", including deepfakes, which blurs the line between reality and fiction and poses significant risks.

Why is mandatory labelling for AI-generated content necessary?

Combating Deepfakes and Misinformation

Deepfakes spread misinformation that can manipulate public opinion, damage reputations, and undermine democratic processes. They also threaten the justice system, since fabricated evidence can lead to wrongful convictions.

Preventing Fraud and Impersonation

AI-generated content poses significant risks, including identity theft, financial scams, and social engineering attacks like deepfake phishing. A notable example is the KBC (Kaun Banega Crorepati) scam, which fraudulently used Amitabh Bachchan's image and voice.

Protecting Individuals and Reputation

Deepfakes disproportionately target women, resulting in non-consensual explicit content, harassment, and severe psychological and reputational damage.

Ensuring Transparency and Trust

Clear labelling that distinguishes human-created from machine-generated content helps users make informed choices and builds trust in platforms.

Upholding Democratic Integrity

The World Economic Forum's Global Risks Report 2024 ranks misinformation and disinformation, amplified by AI-generated content, as the most severe global risk over the next two years, warning that it can distort elections by spreading propaganda.

Addressing National Security Risk

Anti-national forces could use deepfakes to spread propaganda or aid terrorist recruitment.

What are the key provisions of the draft amendments?

Dual Responsibility

The proposed rules place responsibility on both users and platforms.

User Self-Declaration

Users must self-declare whether the information or content they upload is AI-generated or synthetically modified.

Platform Accountability

Significant Social Media Intermediaries (SSMIs), platforms with at least 50 lakh registered users in India, must deploy automated tools to verify user declarations. If a user does not declare AI-generated content, the platform is responsible for proactively detecting and labelling it.
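To make the division of responsibility concrete, the decision a platform faces for each public upload can be sketched as a small function. This is a minimal illustration under stated assumptions, not the procedure laid down in the draft rules: `decide_label`, `LabelDecision`, a 0-to-1 `detector_score` produced by some automated detection tool, and the 0.9 `threshold` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LabelDecision:
    label_as_ai: bool
    reason: str


def decide_label(declared_ai: Optional[bool], detector_score: float,
                 threshold: float = 0.9) -> LabelDecision:
    """Hypothetical SSMI labelling workflow (illustrative only).

    declared_ai: the user's self-declaration (True/False), or None if
        the user made no declaration.
    detector_score: output of an ASSUMED automated detector, 0.0-1.0,
        estimating how likely the upload is AI-generated.
    threshold: ASSUMED cut-off above which the platform labels content
        on its own; not a value taken from the draft rules.
    """
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0 and 1")

    # Step 1: a positive user declaration is enough to require a label.
    if declared_ai is True:
        return LabelDecision(True, "user declared AI-generated")

    # Step 2: the platform checks a "not AI" declaration, or acts on its
    # own when no declaration was made, using its automated tool.
    if detector_score >= threshold:
        if declared_ai is False:
            return LabelDecision(True, "detector contradicts user declaration")
        return LabelDecision(True, "undeclared; detected by platform")

    # Step 3: nothing indicates synthetic content, so no label is applied.
    return LabelDecision(False, "no indication of AI generation")


if __name__ == "__main__":
    for declared, score in [(True, 0.2), (False, 0.95), (None, 0.97), (None, 0.3)]:
        print(declared, score, decide_label(declared, score))
```

Checking the user's declaration first mirrors the rules' emphasis on self-declaration; the detector check then covers both false "not AI" declarations and missing declarations, which is where the platform's own accountability comes into play.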

Mandatory Labelling and Metadata

Scope

The rules apply only to publicly shared content, not private or unpublished material.

Consequences of Non-Compliance

Non-compliant platforms risk losing "safe harbour" protection under Section 79 of the IT Act, which shields online intermediaries from liability for third-party content.

Centralized Takedown Notices

In a separate amendment to the IT Rules, MeitY has restricted who can issue content takedown notices: they must come from a senior government official of Joint Secretary rank or above or, for police authorities, an officer of Deputy Inspector General (DIG) rank or above.

Timeline

The draft is open for public and industry feedback until November 6, 2025.

How are other jurisdictions regulating AI-generated content?

European Union (EU)

The EU AI Act implements a risk-based framework with specific disclosure requirements for generative AI.

It requires providers to mark AI-generated content in a machine-readable, detectable format, and requires deployers to disclose deepfakes and AI-generated text published to inform the public on matters of public interest.

China

China's Deep Synthesis Provisions, in force since January 2023, mandate labelling, provenance tracking, and consent for deepfakes and other generative content.

United States (US)

While there is no comprehensive federal AI law, the Federal Trade Commission (FTC) uses its existing consumer-protection powers to act against deceptive AI practices.

Other Platforms/Initiatives

Platforms like YouTube, Meta, and TikTok are implementing their own AI labelling tools and policies, requiring creators to disclose AI alterations in videos and offering manual or automatic labelling options.

What are the benefits of labelling AI-generated content?

Enhanced User Awareness and Informed Decisions

Labels empower users to understand the origin of content, helping them evaluate its reliability.

Increased Transparency and Trust

Openly disclosing AI use builds trust between users and content creators or platforms, demonstrating a commitment to honesty.

Mitigation of Misinformation and Harm

By clearly distinguishing synthetic content, labels help reduce the spread of misleading information, deepfakes, and potentially harmful content.

Promoting Accountability

Clear disclosure promotes accountability in AI development and deployment, making it easier to trace and address the source of harmful AI-generated content.

Ethical Responsibility and Compliance

Labelling aligns with ethical responsibilities to inform users and prevent deception. It also ensures compliance with evolving legal and ethical standards for AI usage.

Legal Protection

Accurate labelling of AI-generated content can help protect businesses from legal liability for inaccuracies or biases by making the automated origin of the material clear.

What are the challenges in implementing mandatory labelling?

Technical Detection Challenges

AI content detection is imperfect, prone to both false positives (flagging human-created content as AI-generated) and false negatives (failing to flag AI-generated content).
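The scale of the problem becomes clearer with some arithmetic. The sketch below uses purely hypothetical figures: 1 million uploads, 5% of them AI-generated, and a detector that catches 95% of AI content while wrongly flagging 2% of human content. None of these numbers comes from the draft rules or from any real detection tool, and `detection_outcomes` is an illustrative helper.

```python
def detection_outcomes(total_uploads: int, ai_share_pct: int,
                       detection_rate_pct: int, false_flag_pct: int) -> dict:
    """Count labelling errors for a HYPOTHETICAL detector (integer percentages)."""
    ai_items = total_uploads * ai_share_pct // 100
    human_items = total_uploads - ai_items

    true_positives = ai_items * detection_rate_pct // 100  # AI content correctly labelled
    false_negatives = ai_items - true_positives            # AI content missed
    false_positives = human_items * false_flag_pct // 100  # human content wrongly labelled

    flagged = true_positives + false_positives
    return {
        "flagged": flagged,
        "false_positives": false_positives,
        "false_negatives": false_negatives,
        # Fraction of "AI-generated" labels that land on human-made content.
        "human_share_of_flagged": false_positives / flagged if flagged else 0.0,
    }


if __name__ == "__main__":
    result = detection_outcomes(1_000_000, 5, 95, 2)
    print(result["false_positives"], result["false_negatives"])
    print(f"{result['human_share_of_flagged']:.1%} of labelled items are human-made")
```

With these assumed numbers, the detector wrongly labels 19,000 human uploads and misses 2,500 AI ones, so roughly 29% of all "AI-generated" labels would sit on human-made content. When synthetic content is only a small share of uploads, even a low false positive rate produces many wrong labels.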

Ease of Removal

Visible labels and watermarks can be cropped or edited out, and malicious actors can use unregulated or open-source AI tools that never add them in the first place, allowing them to evade detection.

Lack of Standardization

Monitoring and verifying social media content is difficult because there are no uniform technical standards for recording where media comes from (provenance) and no consistent method for verifying it across platforms.

Operational Burden for Platforms

Requiring platforms to obtain user declarations and deploy verification measures adds operational complexity and compliance costs.

Balancing Freedom of Speech

Strict content regulation, even with good intentions, can raise concerns about censorship and potential restrictions on legitimate artistic, satirical, or creative uses of synthetic media.

"Implied Truth" Effect and "Tainted Truth" Effect

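If only some AI content is labelled, users may assume that anything unlabelled is authentic, making unlabelled false AI content seem more credible (implied truth effect). Conversely, mislabelling genuine human content as AI-generated can make users distrust accurate information (tainted truth effect).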

Way Forward

Develop Robust Detection Technologies

Invest in research and development of advanced AI detection tools with higher accuracy and lower false positive and false negative rates, possibly with the Indian Computer Emergency Response Team (CERT-In) coordinating monitoring and alerts.

Standardized Technical Framework

Collaborate with industry stakeholders and international bodies to establish uniform technical standards for embedding metadata and watermarks that are difficult to remove or alter.

Promote Media Literacy and Public Awareness

Launch comprehensive public awareness campaigns and media literacy programs in schools and communities to educate citizens about deepfakes and AI-generated content.

Inter-Ministerial Coordination

Establish an inter-ministerial committee or technical advisory body within MeitY to coordinate AI governance, assess risks, engage with industry, and identify regulatory gaps.

Stakeholder Engagement

Maintain ongoing consultation with AI developers, social media platforms, civil society organizations, and legal experts to refine regulations that balance user protection, innovation, and freedom of expression.

Harmonize with Global Standards

Engage in international forums like the Global Partnership on Artificial Intelligence (GPAI) to align India's AI regulations with global best practices and promote cross-border cooperation on content moderation.

Strengthen Legal Frameworks

Enact robust criminal provisions and comprehensive AI legislation to address the unique challenges posed by deepfakes.

Accountability and Traceability

Emphasize non-removable metadata and strong traceability mechanisms to identify the originators of harmful synthetic content.

Support for Victims

Establish clear legal paths and psychological support mechanisms (like counselling) for victims of deepfakes and AI-generated harassment.

Conclusion

The government's proposal for mandatory labelling of AI-generated social media content aims to ensure transparency and combat deepfakes and misinformation. Its success will require a multi-faceted strategy that combines technology, regulation, coordination, and public education, balancing safety with innovation and rights.

Source: INDIAN EXPRESS

PRACTICE QUESTION

Q. The rise of 'deepfakes' and AI-generated misinformation poses a grave threat to social harmony and national security. Discuss. (250 words)

FAQs

What is AI-generated content?

AI-generated content refers to any text, images, videos, audio, or other media created or assisted by artificial intelligence tools, such as large language models (LLMs). These tools use machine learning and deep learning to generate new material based on patterns they have learned from vast amounts of existing data.

Who owns the copyright in AI-generated content?

Copyright law regarding AI is still evolving and varies by country. Generally, copyright protection requires a human author. In the U.S., works generated solely by AI are not copyrightable; however, where a human provides significant creative input and control over the AI's output, the human-authored elements may be protected.

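How does the DPDP Act affect AI-generated content?

The Digital Personal Data Protection (DPDP) Act, 2023 affects AI content by regulating the processing of digital personal data. Since AI models require vast amounts of data for training and operation, they must comply with the Act's provisions on consent and data security. Violations can attract heavy penalties; for example, failing to take reasonable security safeguards against a personal data breach can lead to a penalty of up to ₹250 crore.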

© 2026 iasgyan. All rights reserved.