MeitY’s new AI Governance Guidelines set out a responsible framework for India’s tech growth, emphasizing trust, safety, and inclusivity. Adopting a risk-based, flexible approach, they aim to ensure accountability across the AI lifecycle and safeguard digital infrastructure, while positioning India as a global leader in ethical, innovation-driven AI governance.


Picture Courtesy: PIB

The Ministry of Electronics and Information Technology (MeitY) released the “India AI Governance Guidelines” to ensure AI is adopted safely, inclusively, and responsibly across sectors.


Mitigating Risks

Without regulation, AI can perpetuate bias, compromise privacy, and blur accountability. For example, an AI-driven loan approval system could unintentionally discriminate against certain groups, undermining the guarantee of equality under Article 14 of the Constitution.

Unlocking Economic Potential

NITI Aayog projects that rapid integration of AI across sectors could add $500-600 billion to India's GDP by 2035. Achieving this growth requires effective governance that builds trust and attracts responsible investment.

Promoting Inclusive Growth – “AI for All”

The government plans to leverage AI for inclusive development and to reduce social and economic inequalities, in line with NITI Aayog's 2018 National Strategy for Artificial Intelligence.

Strengthening Global Leadership

As a founding member and lead chair of the Global Partnership on Artificial Intelligence (GPAI), India is dedicated to ethical and human-centric AI and aims to shape global conversations on this subject.

Seven Guiding Principles (Sutras)

Trust as the Foundation: Build public confidence to encourage safe innovation and adoption.

People First: Prioritize human-centric design, oversight, and empowerment to ensure AI complements human judgment.

Innovation over Restraint: Encourage responsible experimentation while avoiding excessive regulation.

Fairness and Equity: Develop inclusive AI systems that identify and eliminate bias.

Accountability: Define clear responsibility for AI decisions and outcomes throughout the value chain.

Understandable by Design: Mandate transparency and explainability so users and regulators can interpret AI-driven decisions.

Safety, Resilience, and Sustainability: Ensure AI systems are secure, reliable, and contribute to long-term social and environmental goals.

Pillars of Governance and Institutional Structure

The guidelines organize AI governance around six key pillars: Infrastructure, Capacity Building, Policy & Regulation, Risk Mitigation, Accountability, and Institutions.

MeitY proposes a multi-tier institutional setup:

AI Governance Group (AIGG): A high-level policy coordination body.

Technology & Policy Expert Committee (TPEC): Offers technical and strategic guidance.

AI Safety Institute (AISI): Conducts risk assessments, develops safety standards, and promotes global collaboration.

Sector-Specific Regulators: Domain regulators (such as the RBI for fintech) ensure compliance within their respective sectors.

Leveraging Existing Legal Frameworks

The guidelines integrate AI governance into existing laws rather than immediately enacting a standalone AI Act.

They also favour a "techno-legal" approach that builds compliance directly into technology systems, so that safeguards work automatically, "by design."

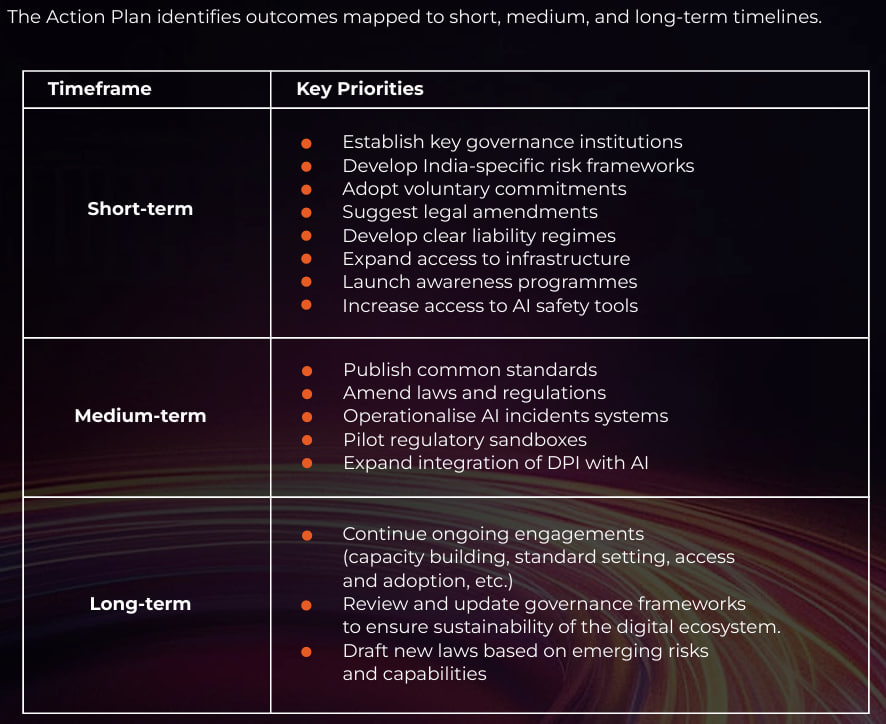

Action Plan

Short-term: Build an AI database, expand access to GPUs and data, and launch awareness programs.

Medium-term: Publish standards, run regulatory sandboxes, and amend outdated laws.

Long-term: Introduce new laws for emerging risks, ensure continuous oversight, and strengthen India’s global leadership in AI governance.

Practical Guidelines

For Industry: Comply with applicable laws, publish transparency reports, use techno-legal safeguards, and set up grievance redressal mechanisms.

For Regulators: Keep frameworks flexible, encourage innovation, avoid over-regulation, and promote compliance-by-design.

Built on the Seven Sutras, the AI Governance Guidelines establish a principle-driven, "light-touch" regulatory approach that balances innovation with ethical responsibility, promoting human-centric values, inclusivity, and accountability in support of India's Viksit Bharat 2047 vision.

Source: PIB

PRACTICE QUESTION Q. How can India's AI governance framework effectively balance promoting innovation with mitigating socio-ethical risks?

The India AI Governance Guidelines are a principle-based framework to promote responsible, ethical, and innovative AI development and deployment in India, balancing innovation with risk mitigation and inclusivity.

Approved in March 2024 with a budget of over ₹10,300 crore, the IndiaAI Mission is a strategic government initiative to build a robust and inclusive AI ecosystem. It rests on seven pillars: AI compute capacity, an innovation centre, a datasets platform (AIKosh), application development, future skills, startup financing, and safe and trusted AI.

It is an AI-powered language translation platform designed to break language barriers and enable access to digital services and content in all 22 scheduled Indian languages. It uses over 350 AI language models for speech-to-speech translation, text-to-speech, and more.

© 2026 iasgyan. All rights reserved.