Generative AI threatens the Right to Privacy by collecting and processing personal data without clear consent. It increases risks of data leaks, inference-based profiling, and deepfakes. India’s DPDP Act, 2023 relies on consent, but AI’s complexity and broad public-data exemptions weaken its protective impact.

Picture Courtesy: INDIAN EXPRESS

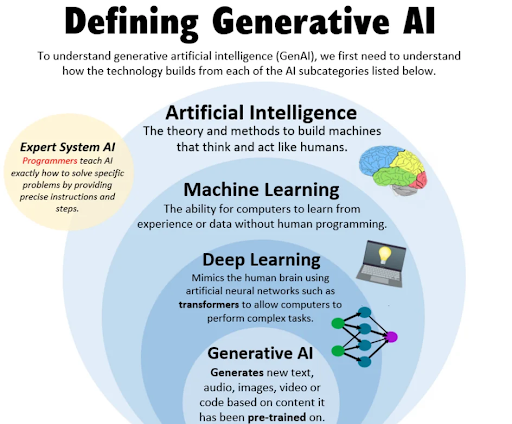

Generative Artificial Intelligence (GenAI) creates new, human-like content (text, images, code, audio). It offers major opportunities but raises concerns about data privacy, national security, and digital sovereignty.

GenAI models generate new content from prompts by identifying patterns learned from datasets.

Modern GenAI systems (ChatGPT, Gemini, Claude) are built on the Transformer architecture, which is effective at capturing context and the relationships between words.

GenAI is expected to add $359-438 billion to India’s GDP by 2030, an increase of 5.9-7.2%. (Source: indiaai)

Inference Risk (Sensitive Information Leakage)

AI models may unintentionally leak highly sensitive information contained in or inferred from user prompts, such as national policy directions, strategic priorities, or security vulnerabilities.

Data Privacy and Transparency

Platforms rarely tell users how their data is stored, tracked, and used. This lack of transparency raises privacy risks, especially when accounts are linked to personal details such as verified phone numbers.

Strategic Dependence on External Ecosystems

Over-reliance on foreign GenAI services contradicts India's goals of data localisation and Atmanirbharta in technology, risking national data falling under external control.

Lack of Uniform Policy and Safeguards

The lack of a unified, government-wide policy has led to inconsistent, fragmented implementation across ministries.

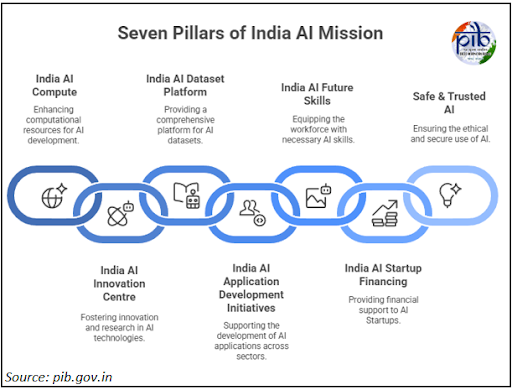

The IndiaAI Mission

The Union Cabinet approved the IndiaAI Mission in 2024 with a total budget of ₹10,372 crore for a period of five years, to build a comprehensive AI ecosystem in the country.

| Pillar of IndiaAI Mission | Objectives |
|---|---|
| Compute Capacity | Build a high-end, scalable AI computing infrastructure with at least 10,000 GPUs through public-private partnerships. |
| Innovation Centre | Develop and deploy indigenous Large Language Models (LLMs) and domain-specific foundational models, particularly for Indian languages. |
| Datasets Platform | Create a unified platform to provide researchers and startups with access to high-quality, non-personal datasets. |
| Application Development | Promote AI applications in critical sectors like health, agriculture, and education. |
| FutureSkills | Expand AI education by offering undergraduate, postgraduate, and Ph.D. level courses in AI. |
| Startup Financing | Provide financial support to deep-tech AI startups to foster innovation. |
| Safe & Trusted AI | Establish a framework for responsible AI, including ethical guidelines, and develop tools for ensuring AI safety. |

Regulatory and Governance Framework

The Digital Personal Data Protection (DPDP) Act, 2023 sets out principles of lawful purpose, data minimisation, and purpose limitation. These apply to personal data processed by AI systems and are meant to ensure accountability and user consent.

AI Governance Guidelines

The Ministry of Electronics and Information Technology (MeitY) recommended creating an India-specific AI Risk Assessment Framework and regulating the AI sector through existing laws rather than enacting a separate AI-specific law.

Strategic Measures for Digital Sovereignty

Restrictions on Foreign AI Tools

Government departments, including the Ministry of Finance, have issued directives restricting the use of external GenAI tools like ChatGPT on official devices to protect sensitive data.

Promoting Indigenous Platforms

Officials are urged to prioritize 'swadeshi' digital tools, such as Zoho Office Suite, and government networks to strengthen data security.

Global Regulatory Landscape: India vs EU

| Aspect | India's Approach | EU's Approach |
|---|---|---|
| Core Philosophy | Adaptive, principles-based regulation that focuses on user harm under existing laws like the DPDP and IT Acts. | Comprehensive, risk-based legislation that categorizes AI systems into unacceptable, high, limited, and minimal risk. |
| Legal Instrument | Uses existing legal frameworks (DPDP Act, 2023; IT Act, 2000) and provides guidelines/advisories. | A dedicated law, the EU AI Act, the world's first comprehensive AI law. |
| Key Focus | Promoting innovation via the IndiaAI Mission while ensuring user safety, data protection, and digital sovereignty. | Protecting fundamental rights, safety, and democracy. Imposes strict obligations on high-risk AI systems. |
| Prohibited Practices | Addresses harms like deepfakes and misinformation through IT rules. No blanket bans on specific AI technologies. | "Unacceptable risk" AI, like government social scoring and real-time public biometric ID (with exceptions), is strictly prohibited. |

Strengthen Indigenous Capabilities

Fast-track the development of Indian LLMs under the IndiaAI Mission to reduce reliance on foreign platforms for governance and public sector applications.

Implement AI Prompt Sanitization

To safeguard data privacy, ensure that all official AI queries are routed through a secure, anonymizing gateway that removes all metadata and user identifiers.
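The gateway idea above can be illustrated with a minimal sketch. It is a toy under stated assumptions, not a description of any deployed government system: the `OfficialQuery` fields, the placeholder tokens, and the three regular expressions are all hypothetical, and a real gateway would need far broader and properly vetted detection of identifiers.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simple patterns (assumptions for illustration).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 12-digit number, optionally grouped in fours (Aadhaar-style format).
ID_NUMBER_RE = re.compile(r"(?<!\d)\d{4}\s?\d{4}\s?\d{4}(?!\d)")
# 10-digit Indian mobile number with an optional +91 prefix.
PHONE_RE = re.compile(r"(?<!\d)(?:\+91[\s-]?)?[6-9]\d{9}(?!\d)")


@dataclass
class OfficialQuery:
    """A query as it arrives at the gateway, with identifying metadata."""
    user_id: str
    department: str
    device_ip: str
    prompt: str


def sanitize_prompt(text: str) -> str:
    """Replace identifiers found in the prompt text with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    # Mask 12-digit IDs before phone numbers so the longer pattern wins.
    text = ID_NUMBER_RE.sub("[ID_NUMBER]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


def to_gateway_payload(query: OfficialQuery) -> dict:
    """Build the outbound payload: only the sanitized prompt is forwarded.

    user_id, department and device_ip are intentionally dropped here.
    """
    return {"prompt": sanitize_prompt(query.prompt)}


if __name__ == "__main__":
    query = OfficialQuery(
        user_id="emp-001",
        department="Finance",
        device_ip="10.0.0.5",
        prompt="Draft a reply to ravi.k@example.gov.in, "
               "phone +91 9876543210, ID 1234 5678 9012.",
    )
    print(to_gateway_payload(query))
```

Pattern matching of this kind only catches identifiers with a predictable format. Sensitive content expressed in ordinary language, such as a policy direction described in a prompt, would pass through unchanged, which is why sanitization complements rather than replaces restrictions on what may be entered into external tools.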

Create National Datasets

Launch a national project to create high-quality, privacy-preserving synthetic datasets for training domestic AI models relevant to the Indian context.

Focus on Niche, Domain-Specific LLMs

Instead of competing directly with large global models, India can focus on creating specialized models for strategic sectors like law, education, healthcare, and agriculture.

Enforce Strict Data Localization

Mandate that all AI platforms operating in India store and process Indian user data locally, in line with the principles of the DPDP Act, 2023.

Generative AI offers economic and governance advantages but poses privacy and security risks. India addresses this through the IndiaAI Mission for local development and the DPDP Act for data protection, aiming for digital sovereignty and self-reliance.

Source: INDIAN EXPRESS

PRACTICE QUESTION

Q. Discuss the ethical and regulatory challenges in balancing innovation in generative AI with the fundamental right to privacy. (150 words)

Generative AI is a type of artificial intelligence that creates new, original content like text, images, and code. Unlike conventional AI that focuses on analysis, generative AI learns patterns from large datasets to produce novel outputs that mimic human creativity.

Traditional AI performs specific tasks like classification or prediction based on existing rules or data. Generative AI, a subset of AI, produces complete new outputs rather than just a decision or a forecast.

India's AI regulatory framework utilizes existing legislation, namely the Information Technology (IT) Act, 2000 (with the IT Rules, 2021) and the Digital Personal Data Protection (DPDP) Act, 2023, to manage aspects like cybersecurity, data privacy, and intermediary liability.

© 2026 iasgyan. All rights reserved