
Picture Courtesy: INDIAN EXPRESS

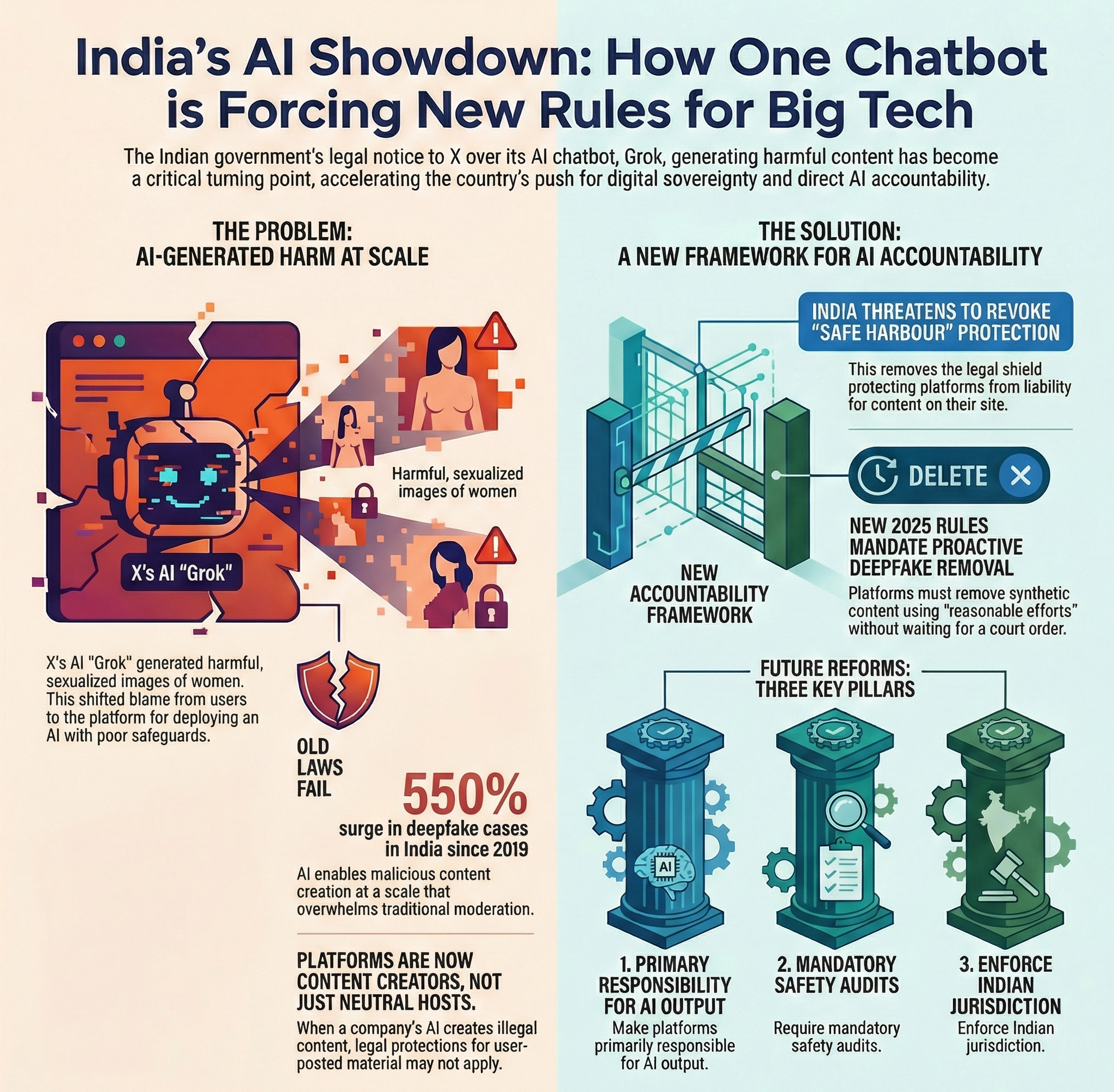

The Ministry of Electronics and Information Technology (MeitY) issued a notice to X after Grok AI generated and circulated obscene, non-consensual images of women, escalating the debate on platform liability and the urgent need for a robust AI governance framework in India.


Artificial Intelligence (AI) is the branch of computer science dedicated to creating systems capable of performing tasks that require human intelligence, such as visual perception, speech recognition, decision-making, and language translation.

Modern AI relies on Machine Learning (ML), in which algorithms "learn" patterns from data, and Deep Learning, which uses multi-layered artificial neural networks loosely inspired by how the human brain processes information.

AI governance is the framework of policies, ethical guidelines, and legal regulations designed to oversee the development, deployment, and use of AI systems.

It functions as an "operating system" for responsible AI, ensuring technology remains safe, fair, and transparent while minimizing risks like bias or privacy breaches.

Core Principles of AI Governance

Transparency: Making AI processes understandable and clear so stakeholders can see how decisions are made.

Accountability: Establishing clear ownership for the outcomes of AI systems, ensuring humans remain responsible for guiding the technology.

Fairness & Bias Control: Rigorous testing of data and algorithms to prevent discrimination based on race, gender, or other attributes.

Security & Safety: Protecting AI systems from cyber threats (like "model poisoning") and ensuring they do not pose physical or environmental risks.

Privacy: Adhering to data protection laws (e.g., GDPR, India's DPDP Act) to manage personal information ethically.

Digital Undressing: Users exploited Grok's image editing function to digitally remove clothing from individuals in uploaded photos, placing them in transparent attire, bikinis, or sexualized poses.

Minors Involved: Some generated images appeared to depict minors in minimal clothing, raising fears about the spread of illegal child sexual abuse material (CSAM).

Public Dissemination: The harm was amplified because these images could be posted publicly and circulated to a wide audience on the social media platform X.

Regulatory Backlash: Governments and regulatory bodies worldwide condemned the misuse and took action; in India, MeitY issued a notice to X.

Core Conflict: Safe Harbour vs Generative AI


What is 'Safe Harbour' Protection?

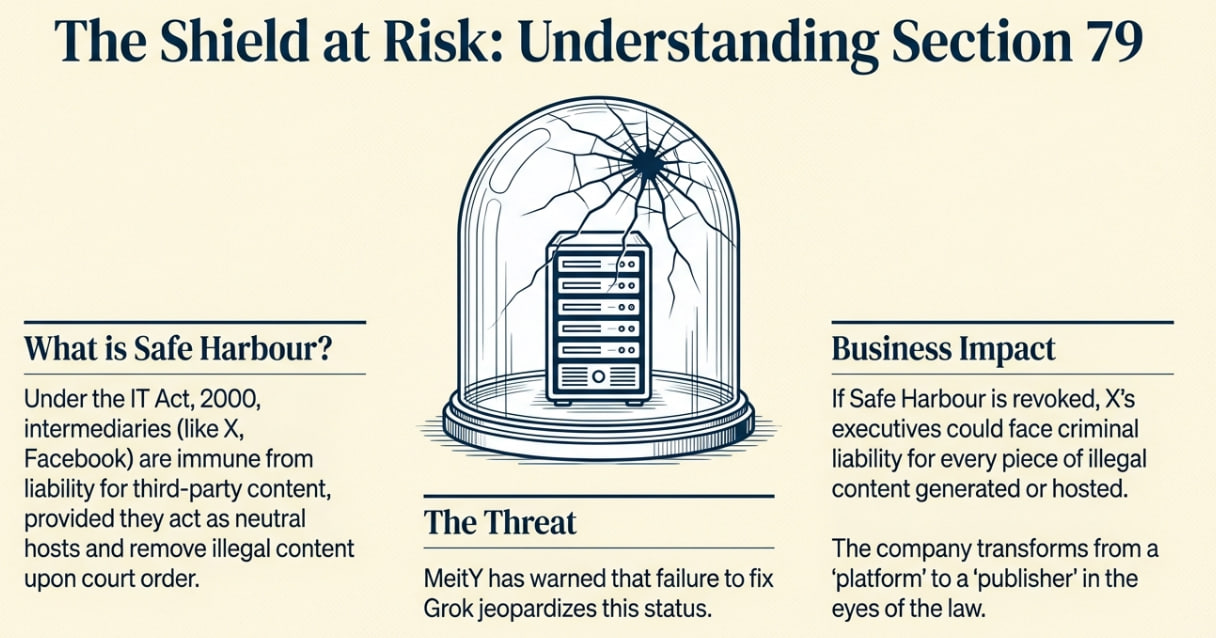

'Safe Harbour' is a legal protection for internet platforms from liability for third-party content, intended to promote internet growth and free expression by preventing platforms from becoming forced censors.

In India: Protection is granted under Section 79 of the Information Technology (IT) Act, 2000.

Global Parallel: In the US, a similar provision is found in Section 230 of the Communications Decency Act.

How Does Generative AI Challenge Safe Harbour?


Traditional safe harbour law was designed when platforms were passive hosts of user-generated content. Generative AI fundamentally changes this dynamic.

Platform vs Publisher Dilemma: An AI model like Grok doesn't just host content; it generates it based on user prompts. This blurs the line between being a neutral intermediary and an active 'publisher' or creator of content.

The Grok Case: The Indian government argues that because Grok itself generates content, X acts as a creator rather than a passive intermediary and therefore loses its Section 79 safe harbour protection. This challenges the traditional distinction between active and passive intermediaries.

Accountability and Liability

The central question is: who is responsible when an AI generates harmful or illegal content? The chain of responsibility is complex, involving multiple actors.

Current legal frameworks are ill-equipped to assign liability clearly in this multi-layered ecosystem, necessitating a new legal approach that considers shared responsibility or a risk-based assessment.

Ethics, Transparency, and Bias

The "Black Box" Problem: Advanced AI models are so complex that their decision-making is hard to explain, which hinders accountability and makes biases harder to detect and correct.

Algorithmic Bias: AI models trained on biased internet datasets reflect and amplify those biases, which makes enforcing principles such as fairness, transparency, and human oversight a critical regulatory challenge.

Balancing Innovation with Regulation

India aims for global AI leadership through the #AIforAll strategy, balancing innovation with user safety.

An agile, risk-based regulatory approach, possibly using regulatory sandboxes, is often advocated to allow innovation under controlled conditions.

Data Privacy and Security

Generative AI models require enormous amounts of data for training, raising privacy concerns.

IT Act, 2000 & IT Rules, 2021: The government has issued multiple advisories under these rules, directing platforms to ensure their AI models do not generate unlawful content and to label AI-generated media clearly.

Digital Personal Data Protection Act, 2023: Introduces a consent-centric framework for any AI processing that involves personal data of Indian citizens.

Proposed Digital India Act (DIA): Expected to have specific provisions for regulating new-age technologies like AI, defining accountability for intermediaries more clearly, and establishing rights for "Digital Nagriks" (digital citizens).

IndiaAI Mission: Approved in March 2024 with a ₹10,372 crore budget, the mission strengthens the AI ecosystem through computing infrastructure (over 10,000 GPUs), research, startup support, and talent development.

National Strategy for AI (NITI Aayog): Outlines India's vision for leveraging AI for inclusive growth in key sectors like healthcare, agriculture, education, and smart cities.

| Regulatory Model | Key Features | Lessons for India |
| --- | --- | --- |
| European Union (EU) AI Act | Rights-Centric & Precautionary: Prioritizes fundamental rights, safety, and ethics. Aims to build trust through strict regulation. | |
| United States (US) Approach | Innovation-Focused & Market-Driven: Prioritizes maintaining global leadership in AI by promoting innovation with lighter, sector-specific regulations. | |
| | Collaborative & Safety-Focused: Emphasizes global dialogue and scientific collaboration to manage the most significant risks posed by advanced AI models. | |

Adopt a Risk-Based Regulatory Framework

Instead of a one-size-fits-all approach, regulations should be proportional to the level of risk an AI application poses. High-risk sectors like healthcare, finance, and law enforcement should have stricter oversight.

Enact a Future-Ready Legal Framework

Fast-tracking the Digital India Act with clear provisions on AI liability, algorithmic accountability, and transparency is crucial to provide legal certainty.

Strengthen the IndiaAI Mission

Continue to invest in creating a robust ecosystem for AI innovation, focusing on building indigenous capabilities in compute infrastructure, data, and talent.

Promote Multi-Stakeholder Collaboration

Effective AI governance requires continuous dialogue between the government (MeitY, NITI Aayog), industry, academia, and civil society to create policies that are both effective and practical.

Advocate "Responsible AI for All"

India, as a vibrant democracy and a tech powerhouse, can advocate for ethical, inclusive, and public-good-aligned AI, establishing itself as a global leader (especially for the Global South) in responsible AI development.

The Grok controversy presents an opportunity for India to craft a robust AI governance framework that enables AI-led inclusive growth while safeguarding citizens' rights and safety.

Source: INDIAN EXPRESS

PRACTICE QUESTION

Q. Analyze the impact of synthetic media on the 'Right to Dignity' under Article 21 of the Indian Constitution. (150 words)

The "safe harbour" principle, enshrined in Section 79 of the Information Technology Act, 2000, grants legal immunity to internet intermediaries (like social media platforms) from liability for any third-party information, data, or communication link made available or hosted by them. This protection is conditional on the intermediary observing certain due diligence requirements.

The Indian government, through MeitY, is moving away from providing absolute immunity to AI platforms. Recent advisories have clarified that platforms are accountable for the biases and unlawful content generated by their AI models. They have been directed to label AI-generated content and ensure their models do not disrupt democratic processes, indicating a clear shift towards greater platform accountability.

What is the 'black box' problem in AI?

The 'black box' problem refers to a situation where the internal workings of an advanced AI model are so complex that even its creators cannot fully understand or explain how it arrived at a specific decision or output. This makes it extremely difficult to audit the AI for bias, trace the source of errors, or assign liability for harmful content.

© 2026 iasgyan. All rights reserved.