Australia banned social media for children under 16 due to mental health concerns, setting a global standard for digital regulation of minors.

Australia enacted the Online Safety Amendment (Social Media Minimum Age) Act 2024, which bans social media access for children under the age of 16.

This legislation places the responsibility of enforcement on tech companies, signaling a global shift from self-regulation to proactive, preventive governance for child online safety.

Why Regulate Children’s Access to Social Media?

Mental Health Protection

Frequent use can lead to anxiety, depression, and low self-esteem due to constant comparison with "idealized" lives. Excessive use may also trigger addictive, dopamine-driven feedback loops.

Child Safety and Exploitation

Regulation aims to safeguard children from online predators, child sexual exploitation and abuse (CSEA), and grooming, as offenders often misuse digital platforms to contact minors.

Protection from Harmful Content

Children are at risk of encountering harmful online content, including violent images, hate speech, and dangerous viral challenges such as the "Blackout Challenge," which has been associated with accidental deaths.

Brain and Physical Development

Adolescents (ages 10–19) are in a sensitive period of brain development. Excessive screen time is linked to:

Data Privacy

Minors may not understand the long-term implications of sharing personal data. Laws like the US Children's Online Privacy Protection Act (COPPA) and Europe's GDPR restrict how companies collect and monetize children's information.

What Does Australia’s Law Propose?

The legislation imposes a clear duty of care on platforms to prevent underage use, shifting the burden away from parents and children.

Mandatory Age Limit: Children under the age of 16 are legally prohibited from creating or maintaining accounts on major social media platforms.

Platform Responsibility: The burden of enforcement lies entirely on the tech companies, not on parents or children. Companies must take "reasonable steps" to identify and deactivate accounts of under-16 users.

Massive Penalties: Social media companies face civil penalties of up to A$49.5 million (about US$33 million) for failing to prevent underage users from accessing their services.

No Parental Exceptions: Unlike laws in some other countries, Australian parents cannot provide consent to bypass this age restriction for their children.

Privacy Guardrails: Platforms are prohibited from misusing personal information gathered for age-assurance purposes and must destroy this data once the verification is complete.

Exempted Services

The ban excludes services deemed to have lower risks of algorithmic manipulation or higher educational value:

Australia's model attempts to find a middle ground between the high-surveillance approach of China and the more fragmented, laissez-faire systems in the US and EU.

China’s Model (State-Driven Control)

China employs a highly centralized, state-controlled approach.

Its "minor mode" regulations impose strict time limits, mandate real-name registration, and filter content based on age and socialist values.

While effective in enforcement, this model comes at a high cost to privacy and personal autonomy.

United States Model (Fragmented & Parental-Consent Focused)

The US lacks a single comprehensive federal law governing minors' use of social media. The primary regulation is the Children's Online Privacy Protection Act (COPPA), which focuses on data collection from children under 13 and requires parental consent.

Recent proposals like the Kids Online Safety Act (KOSA) aim to create a "duty of care" for platforms to mitigate harms like depression and eating disorders but have not yet passed into law.

The approach is fragmented and places a burden on parents.

European Union Model (Risk-Based Regulation)

The EU’s Digital Services Act (DSA) requires platforms to assess and mitigate systemic risks, including those to minors.

It prohibits targeted advertising based on profiling of minors and mandates high-privacy settings by default.

The EU has issued non-binding guidelines to help platforms comply, focusing on "safety by design" rather than a blanket ban.

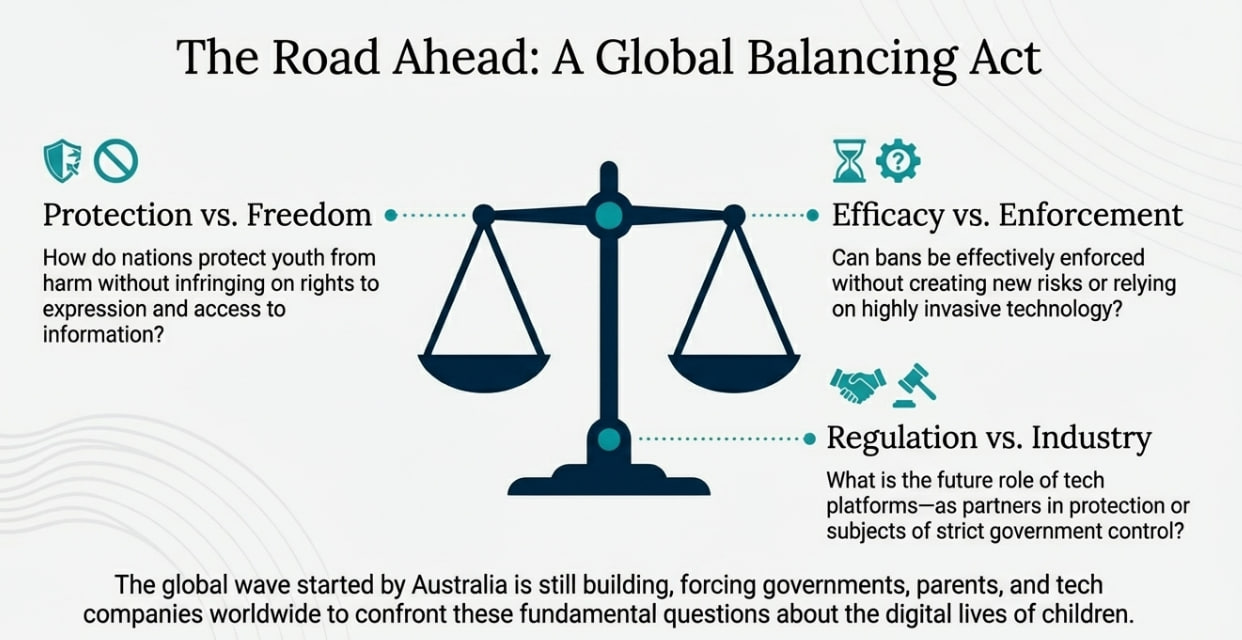

While the Australian model is being watched globally, its success depends on overcoming implementation hurdles and balancing competing rights.

Age Verification vs Privacy: Verifying age without compromising user privacy is the core challenge. Mandating government IDs or biometric data creates data security risks when private firms hold that information.

Equity and Access: Mandating digital IDs could exclude children who lack official documentation, limiting their access to digital resources.

Effectiveness: Critics argue that tech-savvy teens can easily circumvent the ban using VPNs or by other means, pushing them to less-regulated corners of the internet.

Protection vs Autonomy: The ban ignites a debate between the child's right to protection and their right to autonomy and digital participation.

Lessons for India

With a massive youth population and rapidly growing internet penetration, India faces similar challenges. Australia’s law offers valuable insights but cannot be a copy-paste solution.

Existing Framework: India’s legal framework includes the IT Rules, 2021, and the Digital Personal Data Protection (DPDP) Act, 2023.

Enforcement Gaps: Despite the rules, enforcement remains a challenge. The focus needs to shift from platform-centric rules to a genuinely child-centric policy framework.

India must adapt, not merely adopt, by strengthening law enforcement, promoting digital literacy widely, and investigating privacy-preserving age assurance technologies.

Australia's social media ban for minors is a bold regulatory experiment, prioritizing preventive online safety by shifting accountability to tech platforms and setting a new global digital standard, despite enforcement and privacy concerns.

Source: INDIAN EXPRESS

PRACTICE QUESTION

Q. "A blanket ban on social media for minors is a simplistic solution to a complex problem, fraught with challenges of implementation and potential rights violations." Critically examine.

Australia is implementing a law that bans children under the age of 16 from creating or using social media accounts. The law, effective December 2025, places the responsibility on tech companies to enforce this age limit, with non-compliant firms facing fines of up to A$49.5 million (about US$33 million).

"Safety by Design" is a regulatory strategy that shifts the focus from banning access to making platforms inherently safer. It requires companies to embed safety and privacy features into their products' core design, such as disabling addictive features for minors' accounts and ensuring algorithmic transparency. The EU's Digital Services Act (DSA) is a prime example of this approach.

Critics argue that a blanket ban can limit children's access to positive online resources, such as educational content, social support communities, and opportunities to develop digital literacy. It can be seen as a violation of their rights to information and expression, which must be carefully balanced with the need for protection.

© 2026 iasgyan. All rights reserved.