India's potential revision of safe harbour laws under the upcoming Digital India Act aims to hold social media platforms more accountable for harmful content, misinformation, and cybercrimes. While it could strengthen cybersecurity and curb fake news, it may also risk over-censorship and burden smaller platforms with compliance and moderation responsibilities.


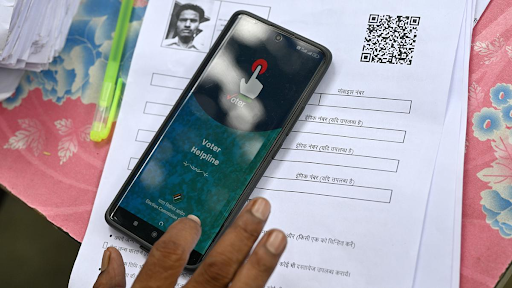

Picture Courtesy: THE HINDU

India may revise safe harbour laws to hold social media liable for harmful content.

Safe harbour is a legal shield that protects websites, especially social media platforms such as X, Facebook, and YouTube, from being held liable for illegal content posted by their users.

This concept encourages innovation by ensuring platforms aren’t unfairly punished for content they don’t create.

In India, Section 79 of the Information Technology Act, 2000 grants this protection to intermediaries, that is, platforms that host third-party content.

Encourages Free Expression => Platforms can host diverse opinions without fear of constant lawsuits. Without safe harbour, every controversial post could land a platform in court, suppressing open dialogue.

Drives Innovation => By reducing legal risks, safe harbour allows companies to experiment with new features and grow. Platforms such as Facebook and YouTube were able to scale in large part because of this protection.

Prevents Over-Censorship => If platforms faced liability for every post, they might over-censor content to avoid trouble, limiting free speech. Safe harbour strikes a balance, letting platforms moderate content responsibly.

Protects Against Unfair Blame => Social media hosts billions of posts daily. Holding platforms accountable for every user’s actions would be like punishing a landlord for a tenant’s crimes.

However, critics argue safe harbour lets platforms avoid responsibility for harmful content, like misinformation or deepfakes, prompting calls for reform.

Intermediary liability refers to the legal responsibility of platforms for content posted by their users.

Safe harbour assumes that platforms are neutral hosts, not publishers, and shields them from liability unless they fail to act on illegal content after being notified.

The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 set out the due-diligence obligations that platforms must meet to retain this protection.

Slow Response to Takedown Notices => The government claims platforms like X and Facebook delay removing illegal content, undermining public safety.

Rise of Misinformation and Deepfakes => Fake news and AI-generated deepfakes can spread rapidly, provoking violence or fraud.

Cyberfraud Concerns => Cybercrimes are increasing, and the government argues that platforms must do more to curb such activities.

Global Precedents => In the U.S., both Joe Biden and Donald Trump have criticized Section 230 (the U.S. equivalent of safe harbour). Biden has called for platforms to be held liable for extremist content, while Trump has claimed they censor conservative voices. India's review aligns with this global push for stricter regulation.


The Ministry of Electronics and Information Technology (MeitY) plans to introduce the Digital India Act (DIA) to revamp safe harbour and impose stricter rules. While no draft has been released, the DIA could redefine how platforms handle content and make them more accountable for misinformation and cybercrimes.

For Platforms => Stricter rules could force platforms to invest heavily in content moderation, hire more staff, or face lawsuits. Small platforms might struggle, reducing competition.

For Users => Tighter regulations could curb misinformation but risk over-censorship. Activists and comedians fear platforms might remove content to avoid liability, silencing dissent.

For Cybersecurity => Stronger rules might push platforms to tackle deepfakes and cyberfrauds more aggressively, aligning with global cybersecurity priorities.


PRACTICE QUESTION Q. Discuss the constitutional and ethical challenges involved in regulating social media platforms. How can a balance be struck between freedom of expression and curbing misinformation? (250 words)

© 2026 iasgyan. All rights reserved